Caching: Because Sometimes, We All Need a Shortcut!

Picture this: you're at a busy coffee shop, craving your favorite cup of coffee. But there's a line snaking around the counter, and patience isn't your strongest suit. You need your caffeine fix, and you need it now.

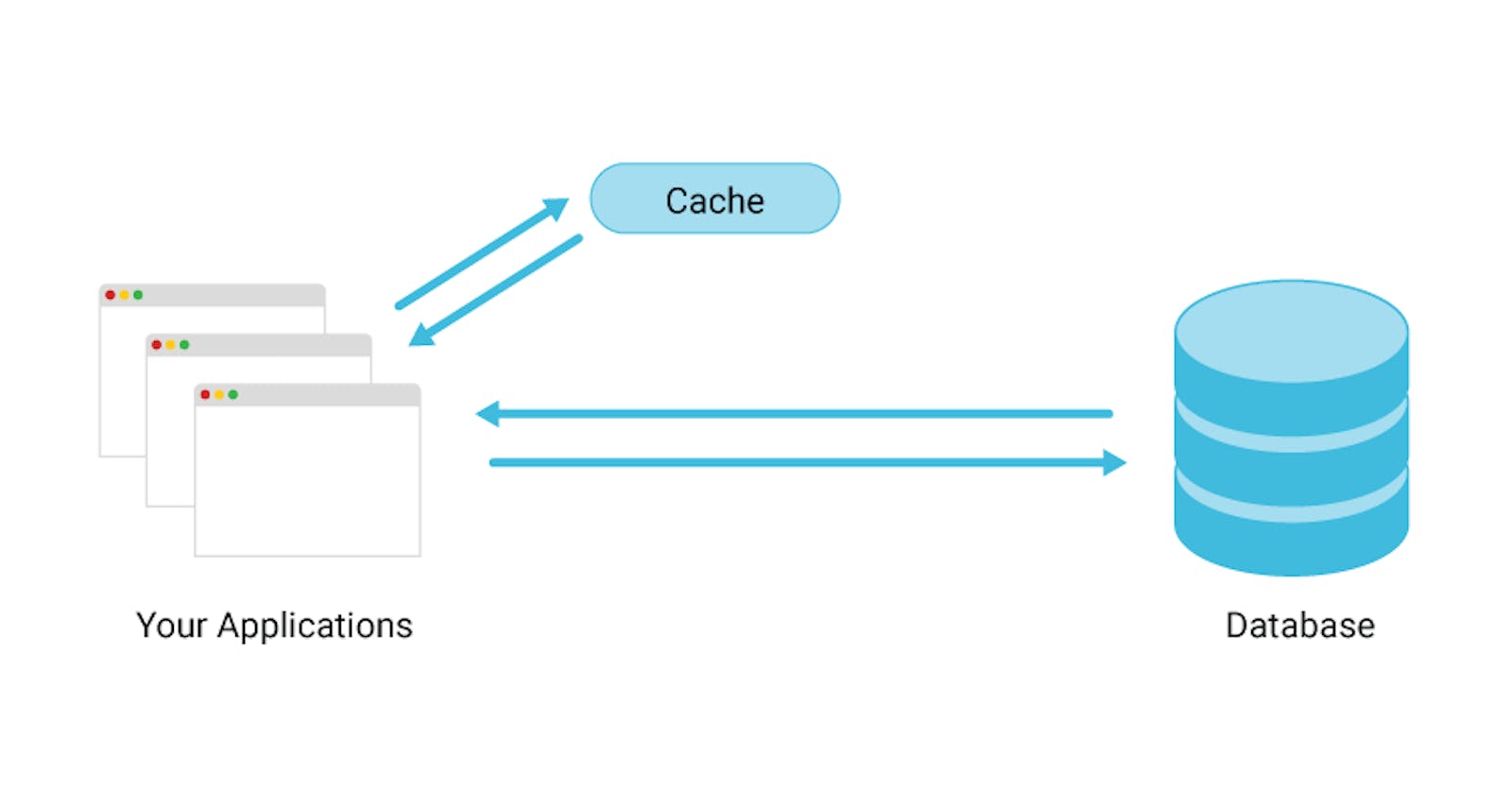

In the world of computers and web development, caching is like that express lane for your data. It's the art of stashing frequently used or hard-to-calculate stuff in a nearby treasure chest, ready to be grabbed at a moment's notice. It's the tech version of keeping your coffee beans right next to the grinder – no waiting, just pure sips of efficiency.

Think of caching as your virtual butler, handing you things you love without making you wait. It's the magic trick that tucks away copies of web goodies like HTML pages, images, and even those pesky database results. By keeping these gems close to you, caching makes your online experiences smoother, your load times snappier, and your users happier.

Why fetch things from the other side of the room when you've got a copy right here, ready to roll? Caching turns data fetching into a wink-and-nod exchange, making websites and applications faster than a caffeinated cheetah. So, let's dive into this cache of wisdom, uncover its secrets, and sprinkle a bit of "quick and clever" on our digital escapades. Time to make your web interactions as seamless as snagging that coveted coffee.

Caching isn't just a tech buzzword; it's the knight in shining armor for your website's performance and your users' happiness. Here's the scoop:

Speed: The Flash of the Web World

Speed isn't just a vanity metric; it's the lifeblood of user satisfaction. Picture this: a user clicks on your website link, eager to explore. But if your site takes ages to load, their excitement turns into frustration, and the "Back" button becomes irresistible.

Caching swoops in as your digital speedster. By storing frequently used data right at the virtual doorstep, caching eliminates the need to fetch everything from scratch. It's like having your treasure map already spread out, guiding users swiftly to what they're seeking. Your website becomes a speed demon, satisfying visitors and reducing bounce rates.

User Experience: More Than Just a Good First Impression

In the digital realm, first impressions are everything. A slow-loading website isn't just a minor inconvenience; it's a recipe for disaster. Users today have the attention span of a caffeinated squirrel – if your site doesn't impress in seconds, they're gone.

Caching comes to the rescue, ensuring that the first impression is a lasting one. With lightning-fast load times, visitors experience instant gratification. Images appear like magic, and content materializes without delay. It's the equivalent of revealing the treasure as soon as the X marks the spot.

Reduced Server Load: The Sigh of Relief for Your Server

Your website's server is the unsung hero working tirelessly in the background. But when too many users storm in at once, it can feel like the wild stampede at a Black Friday sale. This can lead to crashes, slow responses, and a frustrating experience for everyone.

Caching steps in as the crowd control expert. By serving cached content, it reduces the server's workload, allowing it to cater to a larger audience without breaking a sweat. Your server can now relax, confident that caching has your back.

In a nutshell, caching is your trusty companion, enhancing your website's performance, delighting users, and lightening the load on your server. It's the difference between a thrilling treasure hunt and a tiring wild goose chase.

As we delve deeper into caching, you'll go from the very basics to real-world implementation. From understanding the fundamental concepts to mastering practical strategies, this article aims to equip you with the tools to harness caching's potential. Whether you're a code enthusiast or a curious explorer, we're embarking on a voyage that demystifies caching and empowers you to apply it effectively.

Speed isn't just a luxury; it's the currency of user satisfaction.

Optimizing Resources For Efficiency

Consider this: when you're packing for a journey, you strategically choose what to carry based on necessity. You wouldn't lug around a piano if you're headed for a weekend getaway, right? Similarly, websites and applications must optimize their resource usage to ensure swift, seamless experiences.

Caching steps in as the resource optimization maestro. By caching data, you keep a copy of frequently used information close at hand. This minimizes the need to constantly fetch data from the source, reducing the strain on servers and networks. It's like having a digital assistant who knows exactly where to find things, so you don't have to go on a wild goose chase every time.

Now, picture a well-orchestrated ballet, where dancers move in perfect harmony, effortlessly navigating the stage. Caching, in the world of digital performance, is that graceful dancer. By reducing data retrieval times and optimizing resource usage, caching ensures that your website's performance is a seamless, captivating experience.

Let's see real-world scenarios where effective caching works its magic:

Online Shopping, Zero Wait: During a sale rush, caching ensures that product pages load in a flash, allowing customers to swiftly grab their desired items without facing frustrating delays.

Swift Transactions: In the world of online banking and transactions, caching gives quick access to account details and history, making for a smooth financial journey as long as sensitive data is cached with care (for example, per user and with short lifetimes).

News and Information, Now: When it comes to consuming news, caching keeps you engaged by instantly loading popular articles, ensuring you're up to date without any interruptions.

Social Media Delight: Caching makes social media an enjoyable experience, with posts, images, and user profiles loading instantly, encouraging longer and more interactive sessions.

Buffer-Free Streaming: Video streaming becomes a joy as caching reduces buffering times, allowing you to indulge in uninterrupted entertainment.

Caching isn't a one-size-fits-all solution; it's a palette of techniques, each with its hues and strokes. Let's explore the spectrum of caching types, weigh their pros and cons, and discover when each type emerges as the shining star.

1. Browser Caching: Storing Locally for Speed

Browser caching is your browser's knack for saving copies of resources from websites you've visited. These resources include images, stylesheets, scripts, and even entire web pages. When you revisit a site, your browser can fetch these resources from its local cache, speeding up load times.

Pros: Accelerates load times for returning visitors, reduces server load, and conserves bandwidth by minimizing redundant downloads.

Cons: May lead to outdated content if not managed correctly; doesn't benefit first-time visitors.

When to Use: Ideal for content that doesn't change frequently, such as images, stylesheets, and scripts. Perfect for static websites and resources shared across multiple pages.
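Browsers decide what to keep, and for how long, largely from the Cache-Control response header. Here is a minimal sketch of how a server might pick that header per resource type. The function name, the file extensions, and the lifetimes are assumptions chosen for illustration, and the long "immutable" lifetime is only safe if your asset filenames change whenever their content changes.

```python
from pathlib import PurePosixPath

ONE_YEAR = 31_536_000  # seconds; an assumed lifetime for static assets


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for a requested path (hypothetical policy)."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in {".png", ".jpg", ".css", ".js", ".woff2"}:
        # Static assets: let the browser reuse its local copy for a long time.
        return f"public, max-age={ONE_YEAR}, immutable"
    if suffix in {".html", ""}:
        # Pages: the browser may store them but must revalidate before reuse.
        return "no-cache"
    # Everything else: a short, conservative lifetime.
    return "public, max-age=300"


print(cache_control_for("/static/app.css"))
print(cache_control_for("/index.html"))
```

Returning visitors then load the stylesheet from their local cache, while the HTML page is checked with the server before it is reused.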

2. Server-Side Caching: Enchantment in Processing

Server-side caching involves generating and storing pre-rendered versions of web pages or components. These pre-built versions can be served to users swiftly, minimizing server load and reducing data processing time.

Pros: Dramatically boosts performance by delivering pre-rendered content; reduces server load and enhances scalability.

Cons: Dynamic content may require frequent updates; may not suit scenarios where content changes rapidly.

When to Use: Effective for content that doesn't change rapidly, such as blog posts, product listings, and news articles.

3. Content Delivery Networks (CDNs): Global Performance Magic

CDNs are like magical couriers for your content. They distribute copies of your website's assets (images, scripts, videos, etc.) to servers in multiple locations worldwide. When a user accesses your site, the content is fetched from the nearest server, reducing latency and load times.

Pros: Drastically improves load times for users across the globe; enhances website reliability and security.

Cons: Requires a subscription or additional cost; doesn't provide as much control over caching rules.

When to Use: Perfect for websites with international audiences, e-commerce platforms, and media-heavy sites with rich content.

Each caching type has its strengths and weaknesses, making it suitable for specific scenarios. Keeping these caching techniques in your toolkit will do you loads of good.

There is an array of caching strategies, each a move designed to outwit the opponent of slow load times. Let's understand their mechanics and see the scenarios where each strategy triumphs.

1. Expiration-Based Caching: Time as the Decider

Imagine a treasure map that's only valid for a limited time. Expiration-based caching works similarly: cached content is considered valid for a certain duration. Once that time elapses, the cached copy is treated as stale and fetched again from the source, ensuring users receive the latest version.

Efficiency: This strategy is like a fresh breeze: it is simple, and nothing is served for longer than its lifetime. It comes with a trade-off, though. A short lifetime wastes resources re-fetching content that hasn't changed, while a long one risks serving outdated content until the entry expires.

Scenarios: Perfect for frequently updated content like news articles, stock prices, and event listings.
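A small sketch can show the mechanics. The TTLCache class below is a hypothetical, in-memory illustration and not a production cache. The clock is injectable only so the example can simulate time passing without actually waiting.

```python
import time


class TTLCache:
    """Tiny expiration-based cache: every entry lives for ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (value, expires_at)

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)

    def get(self, key, default=None):
        entry = self._store.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self.clock() >= expires_at:
            # The entry outlived its lifetime: drop it and report a miss.
            del self._store[key]
            return default
        return value


# Simulated clock so the example runs instantly.
now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.set("headline", "Markets open higher")
fresh = cache.get("headline")    # still within the 60-second lifetime
now[0] = 61.0
expired = cache.get("headline")  # lifetime elapsed, so this is a miss
print(fresh, expired)
```

On a miss, the caller would fetch the value from the source again and call set, which starts a new lifetime.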

2. Validation-Based Caching: The Last Word from the Source

Imagine a bouncer at an exclusive club, verifying your credentials before granting entry. Validation-based caching works similarly – it checks with the source server to see if cached content is still valid before delivering it to users.

Efficiency: This strategy ensures accuracy by confirming the content's authenticity before presenting it to users. However, it requires communication with the source server, potentially adding a slight delay in serving the content.

Scenarios: Ideal for content that changes infrequently but needs to be accurate, like product descriptions or contact information.
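On the web, this check is commonly done with an ETag: the server labels each response with a fingerprint, the client sends it back in If-None-Match, and the server answers 304 Not Modified when nothing changed. The sketch below models that exchange with plain functions. The function names and the choice of a truncated SHA-256 as the fingerprint are assumptions for illustration.

```python
import hashlib
from typing import Optional


def make_etag(body: bytes) -> str:
    """Derive a fingerprint of the content (assumed scheme: truncated SHA-256)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def handle_request(body: bytes, if_none_match: Optional[str]):
    """Return (status, headers, payload) for a conditional GET."""
    etag = make_etag(body)
    if if_none_match == etag:
        # The client's copy is still valid: send no body at all.
        return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag}, body


content = b"Contact us at the front desk."
status1, headers1, payload1 = handle_request(content, None)
# The client revalidates its cached copy using the ETag it received.
status2, _, payload2 = handle_request(content, headers1["ETag"])
# The content changed on the server, so the old ETag no longer matches.
status3, _, _ = handle_request(b"Contact us by phone.", headers1["ETag"])
print(status1, status2, status3)
```

The round trip still happens on every check, which is the slight delay mentioned above, but an unchanged resource costs only a small header exchange instead of a full download.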

3. Least Recently Used (LRU) Caching: Letting Go of the Past

Imagine a library where the books you haven't touched in ages are the first to go when space gets tight. LRU caching follows a similar principle – the least recently accessed content gets kicked out of the cache when new content needs space.

Efficiency: LRU caching optimizes cache space by prioritizing recently accessed content. However, if a rarely accessed item is vital, it might be evicted prematurely.

Scenarios: Well-suited for scenarios where users frequently access a limited set of content, such as trending products on an e-commerce site.
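Here is one way the eviction rule could look in code, sketched with the standard library's OrderedDict. The LRUCache class and its capacity of 2 are illustrative choices. For caching function results, Python's built-in functools.lru_cache already provides this behavior.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-size cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now the most recently used entry
cache.put("c", 3)  # the cache is full, so "b" is evicted
remaining = list(cache._items)
print(remaining)
```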

Each caching strategy is like a specialized maneuver in the grand optimization symphony. Just as a chess player knows which piece to move and when, understanding these strategies empowers you to orchestrate efficient caching and deliver seamless experiences.

Caching Implementation: Step-by-Step Guide, Tools, and Code Examples

Assess Your Application: Determine which parts of your application would benefit from caching – whether it's frequently accessed pages, database queries, or static assets.

Select a Caching Strategy: Choose between expiration-based, validation-based, or other strategies based on your content's update frequency and accuracy needs.

Choose a Caching Tool: Select a caching tool or system that aligns with your needs. Popular choices include Redis, Memcached, and Varnish.

Integrate Caching Logic: Integrate caching logic into your application's code. This might involve checking the cache before fetching data or updating the cache after data changes.

Set Cache Expiry or Validation Rules: Configure your chosen caching tool to set cache expiration times or validation rules, ensuring cached content remains accurate and up to date.

Implement Cache Invalidation: Handle cache invalidation, ensuring that cached content is refreshed when it becomes outdated. This might involve triggering cache updates when data changes.

Test and Monitor: Rigorously test your caching implementation across different scenarios to ensure it functions as expected. Monitor cache hits and misses to fine-tune your strategy.
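The steps above can be sketched as a small "cache-aside" wrapper: check the cache first, load from the source on a miss, invalidate on writes, and count hits and misses for monitoring. The CacheAside class and the dictionary standing in for a database are hypothetical. In practice the cache would typically be one of the tools mentioned above.

```python
class CacheAside:
    """Check the cache first, fall back to the source, invalidate on writes."""

    def __init__(self, load_from_source):
        self._load = load_from_source
        self._cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        value = self._load(key)   # slow path: ask the source
        self._cache[key] = value  # remember the answer for next time
        return value

    def update(self, key, value, write_to_source):
        write_to_source(key, value)
        self._cache.pop(key, None)  # invalidate so the next read is fresh


database = {"user:1": "Ada"}  # stand-in for a real data source
users = CacheAside(load_from_source=database.__getitem__)
users.get("user:1")           # miss: loaded from the source
users.get("user:1")           # hit: served from the cache
users.update("user:1", "Grace", database.__setitem__)
name = users.get("user:1")    # miss again, returns the updated value
print(name, users.hits, users.misses)
```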

Here are some simple code examples of caching in different programming languages. Let's use a basic scenario where we want to cache the results of a function that calculates the square of a given number.

- Python (Using functools.lru_cache):

      from functools import lru_cache

      @lru_cache(maxsize=5)  # Caches up to 5 most recent results
      def calculate_square(n):
          print(f"Calculating square of {n}")
          return n * n

      print(calculate_square(5))
      print(calculate_square(3))  # This result will be cached
      print(calculate_square(5))  # This result will be retrieved from cache

- Java (Using java.util.HashMap):

      import java.util.HashMap;
      import java.util.Map;

      public class CacheExample {
          private Map<Integer, Integer> cache = new HashMap<>();

          public int calculateSquare(int n) {
              if (cache.containsKey(n)) {
                  System.out.println("Retrieving from cache: " + n);
                  return cache.get(n);
              }
              int result = n * n;
              cache.put(n, result);
              System.out.println("Calculating square of " + n);
              return result;
          }

          public static void main(String[] args) {
              CacheExample cacheExample = new CacheExample();
              System.out.println(cacheExample.calculateSquare(5));
              System.out.println(cacheExample.calculateSquare(3)); // This result will be cached
              System.out.println(cacheExample.calculateSquare(5)); // This result will be retrieved from cache
          }
      }

- JavaScript (Using a simple object):

      const cache = {};

      function calculateSquare(n) {
          if (cache.hasOwnProperty(n)) {
              console.log("Retrieving from cache:", n);
              return cache[n];
          }
          const result = n * n;
          cache[n] = result;
          console.log("Calculating square of", n);
          return result;
      }

      console.log(calculateSquare(5));
      console.log(calculateSquare(3)); // This result will be cached
      console.log(calculateSquare(5)); // This result will be retrieved from cache

- PHP (Using the Memcached extension):

      <?php
      // Initialize Memcached
      $memcached = new Memcached();
      $memcached->addServer('localhost', 11211);

      function calculateSquare($n) {
          global $memcached;

          // Memcached keys are strings, so build an explicit key
          $key = "square:$n";
          $cachedResult = $memcached->get($key);
          if ($cachedResult !== false) {
              echo "Retrieving from cache: $n\n";
              return $cachedResult;
          }

          $result = $n * $n;
          $memcached->set($key, $result);
          echo "Calculating square of $n\n";
          return $result;
      }

      echo calculateSquare(5) . "\n";
      echo calculateSquare(3) . "\n"; // This result will be cached
      echo calculateSquare(5) . "\n"; // This result will be retrieved from cache
      ?>

In this example, we're using the Memcached extension to store and retrieve cached results. The extension is not bundled with PHP, so make sure you have it installed and enabled in your PHP configuration and have a Memcached server running on localhost with port 11211.

These examples demonstrate basic caching implementations. In real-world scenarios, you will likely use more advanced caching libraries or frameworks, depending on your application's requirements. You might also want to handle connection errors and set expiration times for cached items.

Caching Tools, Libraries, and Frameworks

Redis: An advanced key-value store that supports various data structures. It's commonly used for caching due to its speed and versatility.

Memcached: A simple, distributed caching system that stores data in memory, ideal for speeding up database queries.

Varnish: A web application accelerator that serves as a reverse proxy cache, drastically improving page load times.

Cache Expiration and Challenges:

Cache expiration refers to the process of removing or updating cached data after a certain period has passed. It's crucial to prevent stale or outdated data from being served to users. Cache expiration helps ensure that the cached content remains relevant and accurate.

You might face several challenges with cache expiration, including stale content, resource utilization, balancing freshness against performance, and inconsistent expiry across layers.

Techniques for Cache Invalidation:

Cache invalidation is the process of removing or updating cached data when it becomes stale or irrelevant. There are several techniques to handle cache invalidation:

Manual Invalidation: Developers explicitly remove or update cache entries when they know the data has changed. This is precise but might be error-prone and can lead to missed invalidations.

Time-Based Invalidation: Cache entries are set to expire after a predetermined time interval. While simple, this can result in serving slightly outdated data and might not be suitable for data that changes frequently.

Event-Driven Invalidation: Cache entries are invalidated based on events, such as data updates or changes. This requires a mechanism to trigger cache invalidation when relevant data changes occur.

Versioning: Each cache entry is associated with a version number. When data changes, the version number is updated, and clients request data using the new version number.

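TTL Extension: When data is accessed from the cache, the entry's Time-To-Live (TTL) is extended if the data is still relevant. This keeps frequently used entries in the cache and reduces cache misses, but it does not refresh the data itself, so pair it with another invalidation technique when freshness matters.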
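As an illustration of the versioning technique combined with an event-driven trigger, the sketch below puts a version number inside every cache key. Bumping the version on a data-change event makes all old entries unreachable at once. The VersionedCache class, the key layout, and the "product" namespace are assumptions made for this example.

```python
class VersionedCache:
    """Cache whose keys embed a per-namespace version number."""

    def __init__(self):
        self._versions = {}  # namespace -> current version number
        self._store = {}     # "namespace:vN:key" -> value

    def _key(self, namespace, key):
        version = self._versions.get(namespace, 1)
        return f"{namespace}:v{version}:{key}"

    def get(self, namespace, key, default=None):
        return self._store.get(self._key(namespace, key), default)

    def set(self, namespace, key, value):
        self._store[self._key(namespace, key)] = value

    def bump(self, namespace):
        """Invalidate every entry in the namespace by moving to a new version."""
        self._versions[namespace] = self._versions.get(namespace, 1) + 1


products = VersionedCache()
products.set("product", "42", {"price": 10})
before = products.get("product", "42")
products.bump("product")  # e.g. called from a "price changed" event handler
after = products.get("product", "42")
print(before, after)
```

Entries written under the old version are never read again, but they still occupy memory, so a real cache would rely on expiration or eviction to clean them up.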

Handling Cache Expiration Gracefully:

To avoid issues related to stale content, it's important to handle cache expiration gracefully:

Graceful Degradation: When cached content expires, ensure that the application gracefully degrades to retrieve and serve the latest data from the source.

Asynchronous Updates: Use asynchronous techniques to update the cache after serving the cached content. This reduces the impact of cache updates on response times.

Preloading: Proactively refresh the cache with new data before it expires. This ensures minimal downtime between cache expiration and refresh.

Fallback Mechanisms: If cached data is unavailable, have a fallback mechanism to retrieve data from the source and avoid service disruptions.

Cache Metrics and Monitoring: Monitor cache hit rates, miss rates, and expiration rates to identify potential issues and adjust cache strategies accordingly.
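A rough sketch of how some of these ideas might fit together: the cache below goes back to the source when an entry expires, counts hits and misses, and, as an extra assumption not covered above, serves the expired copy if the source is temporarily unreachable. The class name, the fetch_price function, and the simulated clock are all hypothetical.

```python
import time


class StaleOnErrorCache:
    """Refetch on expiry, but serve the stale copy if the source fails."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (value, fetched_at)
        self.hits = self.misses = self.stale_served = 0

    def get(self, key):
        entry = self._store.get(key)
        if entry is not None and self._clock() - entry[1] < self._ttl:
            self.hits += 1
            return entry[0]
        self.misses += 1
        try:
            value = self._fetch(key)
        except Exception:
            if entry is None:
                raise  # nothing cached to fall back to
            self.stale_served += 1
            return entry[0]  # degrade gracefully with the old value
        self._store[key] = (value, self._clock())
        return value

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


now = [0.0]          # simulated clock
source_up = [True]   # simulated source availability


def fetch_price(key):
    if not source_up[0]:
        raise ConnectionError("source unavailable")
    return 100


prices = StaleOnErrorCache(fetch_price, ttl_seconds=30, clock=lambda: now[0])
first = prices.get("sku-1")   # miss: fetched from the source
second = prices.get("sku-1")  # hit: served from the cache
now[0] = 31.0
source_up[0] = False
third = prices.get("sku-1")   # expired and source down: stale value served
print(first, second, third, prices.stale_served)
```

Whether serving stale data is acceptable depends on the content: it may be fine for a product description and wrong for an account balance.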

Cache expiration and invalidation are critical aspects of maintaining a responsive and accurate application. Choosing the right cache expiration strategy and implementing effective cache invalidation techniques are key to balancing performance and data freshness while avoiding potential pitfalls associated with stale content.

Common Mistakes in Caching Implementation:

Over-caching or Under-caching

Lack of Cache Invalidation

Ignoring Cache Expiry

Inconsistent Keys

Not Considering Cache Lifetime

Caching Dynamic Content

Bypassing Cache Logic

Ignoring Cache Monitoring

No Fallback Mechanism

Caching Everything

To mitigate these issues and mistakes:

Plan Cache Strategy: Define a clear caching strategy based on your application's needs and data usage patterns.

Choose the Right Cache: Use appropriate caching solutions (e.g., in-memory cache, content delivery network) for different types of data.

Set Clear Expiry Times: Choose reasonable cache expiration times to balance freshness and performance.

Implement Invalidation: Implement proper cache invalidation mechanisms to keep data up-to-date.

Test Thoroughly: Test caching strategies thoroughly in various scenarios to identify potential issues.

Monitor and Optimize: Continuously monitor cache performance and adjust caching strategies as needed.

Caching is a powerful tool when used correctly, but it requires thoughtful planning and implementation to avoid the pitfalls associated with incorrect or ineffective caching practices.

The key to being successful with any technology is to stay up to date, agile, and open-minded. Some emerging technologies and trends in caching include:

HTTP/2 and HTTP/3: Newer versions of the HTTP protocol, such as HTTP/2 and HTTP/3, come with improved performance and multiplexing capabilities. These protocols carry many requests over a single connection, reducing the need for multiple connections and potentially impacting caching strategies.

Edge Caching and CDNs: Content Delivery Networks (CDNs) are evolving to provide more sophisticated edge caching solutions. Edge caches bring data closer to the user, reducing latency and improving content delivery speed.

Serverless Architecture: Serverless computing abstracts server management, allowing developers to focus on code. While serverless architectures might change caching dynamics, they also introduce opportunities for optimizing caching strategies in event-driven systems.

Distributed and Microservices Architectures: As applications move towards distributed and microservices architectures, caching strategies need to adapt to the new challenges of data consistency, synchronization, and cache invalidation across multiple services.

Machine Learning-Powered Caching: Machine learning algorithms can predict user behavior and cache content proactively. This approach optimizes cache hit rates by preloading data that is likely to be requested.

Content-Aware Caching: Caches are becoming more intelligent by understanding the content they store. This allows for smarter cache eviction policies and more targeted cache utilization.

Real-Time Data Streaming: Caching strategies are being reimagined in scenarios involving real-time data streaming, where data is continuously generated and needs to be cached efficiently.

In-Memory Computing: In-memory databases and data stores are becoming more prevalent, offering extremely fast data retrieval by keeping data in RAM. This impacts how caching is employed in modern applications.

Blockchain and Distributed Ledgers: For decentralized applications, caching within the blockchain and distributed ledger systems presents unique challenges and opportunities.

As new technologies emerge and application architectures evolve, caching strategies need to be revisited and adjusted to ensure optimal performance and user experience.

In the world of web development, where speed and seamless user experiences are paramount, caching emerges as the unsung hero that can transform your digital interactions. Just as a shortcut in a busy coffee shop gets you your beloved brew faster, caching is the express lane for your data, offering efficiency, speed, and a smoother ride through the digital landscape.

Throughout this journey, we've delved into the art of caching, from understanding its fundamental concepts to exploring various caching types and strategies. We've witnessed how caching can accelerate load times, enhance user experiences, and relieve the strain on servers. With examples in hand, we've seen how caching can be implemented across different programming languages, keeping your applications agile and responsive.

But beyond the technicalities, caching is a strategic approach that empowers your websites and applications to shine. It's not just about serving content faster; it's about crafting an online environment where users feel valued and engaged. As the digital world continues to evolve with new technologies, architectures, and trends, caching remains a timeless tool that adapts and evolves with the times.

Now that you are armed with knowledge, it's time to embark on your caching adventure. Start by assessing your application's needs, selecting the appropriate caching strategy, and integrating caching logic seamlessly. Choose the right tools and frameworks that align with your goals and remember that while caching can offer incredible benefits, it requires thoughtful planning and monitoring to avoid common pitfalls.

So, whether you're a seasoned developer seeking to optimize performance or an enthusiastic explorer eager to enhance user experiences, caching is your trusty companion on this journey. Implement caching with care, embrace the speed, and watch as your digital world becomes a place where information flows effortlessly, just like sipping that perfect cup of coffee without the wait. It's time to unlock the potential of caching and make your mark on the world of lightning-fast web experiences.